Applied AI in Engineering & Computer Science Symposium

2024 Symposium Recap

On Wednesday, Sept. 18, the Applied AI in Engineering & Computer Science Symposium brought together industry leaders and NC State College of Engineering faculty, staff and students for discussion on our world’s AI-driven future and what the college can do now to ensure our students are prepared.

Videos of the keynote speakers and industry roundtable are available below, as are event photos and the 90 posters that were presented by the college’s talented graduate students.

This is an exciting time for the college. Faculty members and graduate students are already using AI in their research, but there is more the college can do to integrate AI into curricula across engineering and computer science disciplines and to prepare students for an ever-shifting technology landscape.

Dean Pfaendtner’s Takeaways

There is no better time for our college to become a preeminent center for applied AI in engineering and computer science.

With our expansion through Engineering North Carolina’s Future, now is the time to hire faculty members with expertise in applying AI to their research and teaching. Our department heads are on board with this strategy. Beyond hiring, we are thinking critically about what the classrooms and labs of the future will look like in our new engineering building.

We can’t yet see the full view of where applied AI will take us. But we do know data-driven engineering is going to be a critical part of the future.

Even the leading experts don’t know exactly how AI will change our industries. What we need to focus on in our College of Engineering is ensuring our students are skilled in data-driven engineering and that they are comfortable navigating an ever-changing technology landscape.

Collaboration and communication are two important skills our students need to develop.

Almost every speaker emphasized collaboration and communication as critical skills needed to build and use AI interfaces effectively. Our students need to know how to clearly share ideas, details and context not just while developing AI technologies, but also when talking and listening to end users.

Our faculty, staff and graduate students are already working at the cutting edge in applying AI to their research.

We have a strong base to build off of, and the breadth and depth of research on display was inspiring. Our faculty members and students are creatively applying AI to so many disciplines. Posters mentioned just about anything you could think of: guide dogs, DNA storage, robotic limbs, historical Arabic documents, yams, autonomous vehicles, materials discovery and so much more.

AI is a key tool in making learning more effective and engaging.

One-on-one tutoring and narrative-centered learning are two of the most effective learning experiences. AI is a powerful tool that can scale up the use of both in classrooms to help students remain engaged and motivated, and I am excited to see our continued developments in this space.

Investors are interested in AI, but a lot of work needs to be done to ensure infrastructure is ready.

There needs to be a massive shift to increase our global data storage capacity so that all industries and academic settings can embrace and integrate AI into their work. As LLMs took off, they put more and more pressure on our power grid and resources. Part of moving toward an AI-driven future is ensuring we have the infrastructure to support it. Our college has been working for more than a decade to develop the power grid of the future through the FREEDM Systems Center.

Videos

Applied AI in Engineering and Computer Science Symposium: Greg Mulholland

Watch now

Applied AI in Engineering and Computer Science Symposium: James Lester

Watch now

Applied AI in Engineering and Computer Science Symposium: Radhika Venkatraman

Watch now

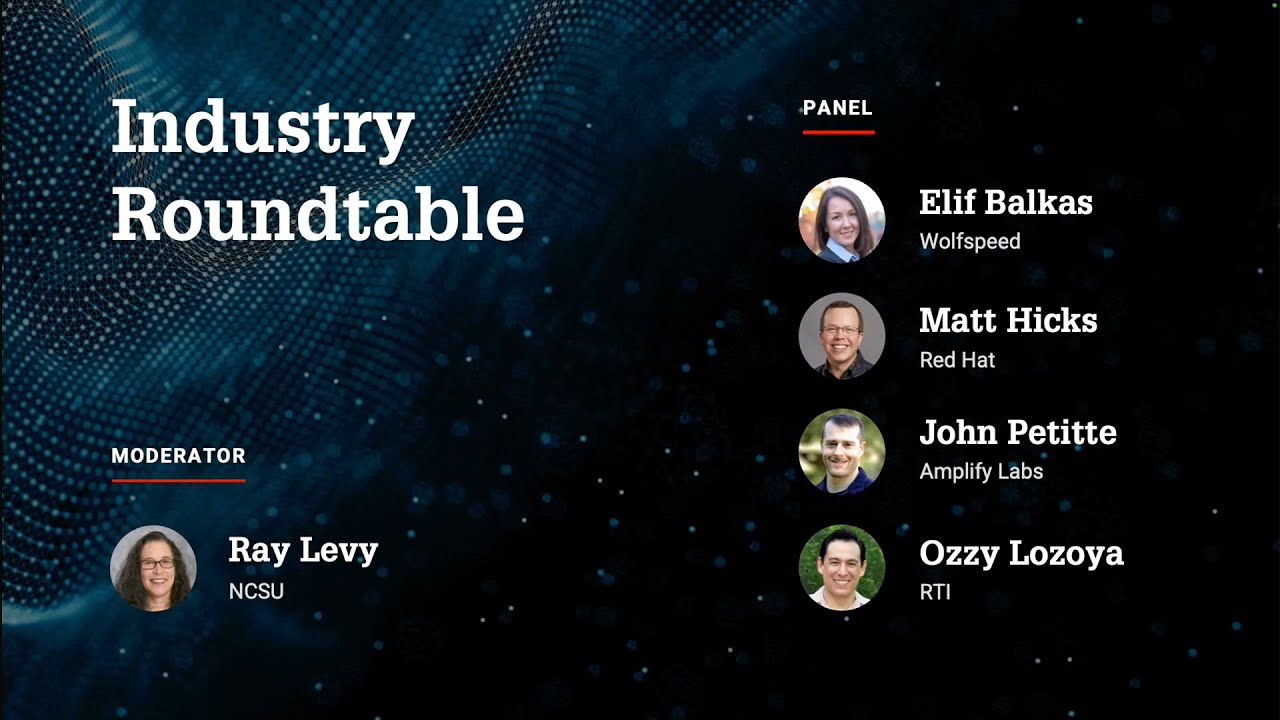

Applied AI in Engineering and Computer Science Symposium: Industry Roundtable

Watch now

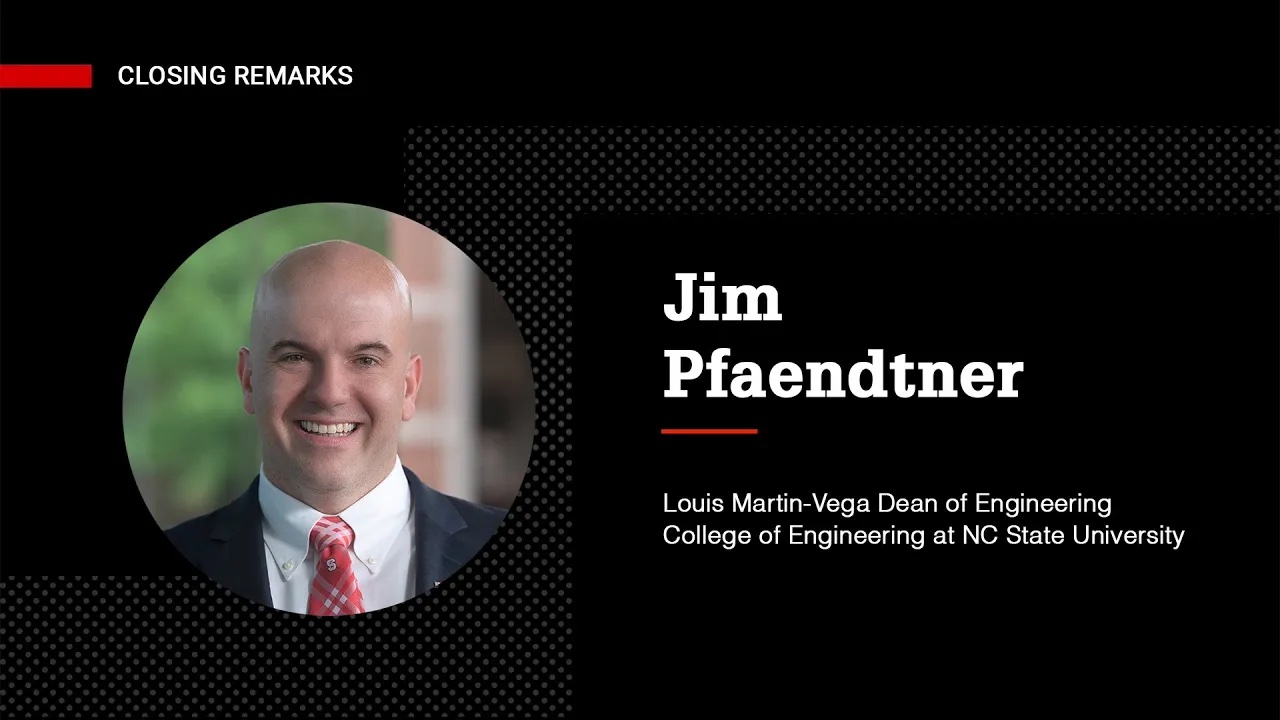

Applied AI in Engineering and Computer Science Symposium: Jim Pfaendtner

Watch now

Photo Gallery

Advisory Committee

Poster Session

The 2024 Applied AI in Engineering & Computer Science Symposium poster session provided College of Engineering faculty, postdoctoral researchers, and graduate students an opportunity to learn more about the breadth of AI-related research currently underway in the college.

We encouraged posters that demonstrate the applications of AI in interdisciplinary areas and cover a broad spectrum of applications and AI tools utilized.

The Graduate Student Poster Session featured College of Engineering graduate students currently enrolled in an engineering program and postdoctoral researchers who are conducting research in an AI-related field or using AI to advance research.

Biological and Agricultural Engineering

Shana McDowell

smmcdow2@ncsu.edu

Faculty Sponsor: Daniela Jones

Project Title: The Development of Machine Learning Models for the Assessment of In-season Sweetpotato Root Growth and Crop Yield Estimates

Project Abstract

Sweetpotatoes vary widely in shape and size, and consumers prefer particular characteristics over others. Optimizing the yield to match consumer preferences would increase growers’ profits and reduce waste. We used machine learning algorithms to highlight the environmental factors and the harvesting, planting, and application decisions that contribute to sweetpotato shape and size throughout the growing season. In this process, we also gained a better understanding of the sweetpotato developmental stages by using root images of sweetpotatoes as input to computer vision algorithms. We worked with a large sweetpotato grower, packer, and distributor in North Carolina that manages nearly fifteen thousand acres. The data for this study come from several on-farm locations scattered over a geographical area of about 2,500 square miles and from two growing seasons. Sweetpotato weight and length-to-width ratio were predicted using Linear Regression, Support Vector Machine, Random Forest, and XGBoost models. Results show that important predictors of sweetpotato weight and length-to-width ratio are minimum temperature, soil bulk density, and cumulative precipitation. Elevation and fertilizer application also emerged as important variables for sweetpotato length-to-width ratio. When predicting average weight, the Linear Regression model performed best (RMSE = 4.44, R² = 0.49), and the Random Forest model performed best when predicting length-to-width ratio (RMSE = 0.44, R² = 0.74). As part of our translational research efforts, we have also created a dashboard that displays in-field data and supports stakeholder decision-making throughout the growing season to determine an optimal harvest window. Growth estimates obtained from this analysis will be compared to growth measurements of previous growing seasons.
This analysis will aid in the development of farm-to-market decision models resulting in a data-driven agri-food supply chain, an optimized crop yield, and a better use of growers’ resources.
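The two metrics quoted in this abstract, RMSE and R², can be computed as in the minimal sketch below. The observed and predicted values are hypothetical numbers chosen for illustration; they are not data from the study, and the helper names `rmse` and `r_squared` are our own.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between observed and predicted values."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Hypothetical length-to-width ratios, for illustration only.
observed = [2.0, 2.5, 3.0, 3.5]
predicted = [2.1, 2.4, 3.2, 3.3]

print(f"RMSE = {rmse(observed, predicted):.3f}")
print(f"R2   = {r_squared(observed, predicted):.3f}")
```

RMSE is expressed in the units of the predicted quantity, so the weight and ratio RMSE values in the abstract are not directly comparable with each other; R² is unitless.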

Leticia Santos

lsantos2@ncsu.edu

Faculty Sponsor: Daniela Jones

Project Title: Crop residue cover mapping by integrating multi-year satellite imagery and machine learning

Project Abstract

Climate-smart agricultural practices focus on farm management techniques that can improve soil health, such as conservation tillage. Conservation tillage leaves crop residue on the soil surface after harvest, which protects against erosion, conserves soil moisture by shielding the soil against extreme heat, and suppresses weeds. Currently, the most accurate method for assessing conservation tillage adoption is ground-level surveying, which, although effective, is time-consuming, labor-intensive, and not easily scalable. Remote sensing allows ground surveys to be scaled up to estimate crop residue cover over larger extents. Crop residues, characterized by high lignocellulose content, are best differentiated using the narrow shortwave infrared (SWIR) bands available in WorldView-3 (WV3) imagery. While WV3 imagery has high spatial resolution (~3.5 m in the SWIR), it is not publicly available, is only collected when tasked, and covers only a small spatial footprint. In contrast, publicly accessible Landsat and Sentinel imagery covers larger spatial extents but has only two broad SWIR bands, which cannot measure lignocellulose absorption directly due to their placement. In this study, we used high-accuracy crop residue cover maps derived from WV3 imagery and ground survey data to train machine learning techniques including random forest, gradient boosting, and neural networks to estimate crop residue cover with moderate-resolution Harmonized Landsat Sentinel-2 (HLS) imagery. We calculated various spectral indices derived from HLS bands to estimate fractional crop residue cover on the Delmarva Peninsula, USA for 2017, 2019, 2021 and 2022. All of the machine learning methods tested were effective for predicting crop residue cover, with RMSE lower than 0.1 for all years.
The lowest RMSE was obtained with a 3-layer (16 neurons per layer) neural network for 2022, which is likely related to the close temporal pairing of the HLS and WV3 image acquisitions. This modeling approach will enable region-scalable estimation of crop residue cover that could be used to more accurately monitor conservation tillage adoption on agricultural lands.
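As an illustration of what a spectral index built from two broad SWIR bands looks like, the sketch below computes the Normalized Difference Tillage Index (NDTI), a commonly used residue-related index. The abstract does not say which indices were used, so NDTI is an assumption here, and the reflectance values are hypothetical.

```python
def ndti(swir1, swir2):
    """Normalized Difference Tillage Index from two broad SWIR reflectances."""
    denom = swir1 + swir2
    if denom == 0:
        return float("nan")  # no signal in either band
    return (swir1 - swir2) / denom

# Hypothetical surface reflectances (unitless, 0-1) as (SWIR1, SWIR2) pairs.
pixels = [(0.30, 0.20), (0.25, 0.24), (0.18, 0.18)]
indices = [ndti(s1, s2) for s1, s2 in pixels]

for (s1, s2), value in zip(pixels, indices):
    print(f"SWIR1={s1:.2f} SWIR2={s2:.2f} NDTI={value:.3f}")
```

Indices like this one would serve as input features to the regression models; the ground-truth residue fractions would come from the WV3-derived maps described above.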

Biomedical Engineering

Matt Dausch

medausch@ncsu.edu

Faculty Sponsor: David Lalush

Project Title: Anatomical Standardization of Magnetic Resonance Images of the Knee Using AI-Based Segmentation and Two-Channel Deformable Registration

Project Abstract

Introduction

Magnetic resonance (MR) imaging is a common tool used to study osteoarthritis (OA) due to its high soft tissue contrast and its ability to effectively visualize affected cartilage. Traditionally, MRI-based studies have segmented images into regions of interest (ROIs) or utilized anatomical standardization of a population of images to identify statistically significant ROIs. Anatomical standardization, such as the use of brain templates for functional MRI experiments, is generally preferred if the analysis is not necessarily confined to investigator-predefined ROIs. The knee has proved difficult to standardize due to greater variations in anatomy between individuals. In this study, we propose a two-channel method of constructing an anatomical knee template utilizing both an anatomical MR knee image and a label image segmenting the tibia and femur via a U-net convolutional neural network to facilitate the goal of a finer-grained analysis of the cartilage surface.

Image Acquisition

Anatomical and T1rho MR knee images were taken with a double echo steady state (DESS) sequence and spin-locked T1rho sequence respectively on a 3T Magnetom Prisma (Siemens) during the same scan session.

Bone Labeling

The anatomical DESS knee images were used to label the tibia and femur. This labeling was done via a 55-layer U-net image segmentation network trained on 33 investigator-labeled DESS knee images.

Knee Template

The knee template was created as an anatomical average of 21 DESS uninjured knee images using Advanced Normalization Tools (ANTs) (University of Pennsylvania). The creation of the template utilized two-channel images, the anatomical DESS image and the bone-label image, in a greedy B-spline symmetric image normalization (SyN) deformable registration through five iterations.

Registration of Individual Knee to Two-Channel Template

DESS registration of an individual subject’s knee to the template used ANTs in a three-step transformation process (rigid, affine, deformable) to determine a deformable mapping from its intrinsic coordinate system to the template coordinate system. Four T1rho relaxation time volumes were then warped with the calculated transform to fit the template and used to calculate a quantitative T1rho relaxation time map in the template coordinate system.

Results/Discussion/Conclusion

When the final quantitative T1rho map is fully warped, it mimics the anatomical structure of the template with the DESS image resolution. The most consistent and clean warping of the cartilage is at the bottom of the femoral condyle and the top of the tibial condyle, while cartilage located towards the anterior/posterior sides of the femoral condyle resulted in slightly more blurring in the template. T1rho maps were successfully warped in most cases to match the cartilage anatomy to that of the template despite the differences in intra-slice (0.4375 mm x 0.4375 mm to 0.3125 mm x 0.3125 mm) and inter-slice (4 mm to 1 mm) resolution. The method of transforming and averaging a sample set of MR knee images and bone labels created a two-channel knee template allowing for the anatomical standardization of an individual subject’s knee. This standardization ultimately allows for the future goal of creating a finer-grained analysis of the cartilage surface over a population.

Krysten Lambeth

kflambet@ncsu.edu

Faculty Sponsor: Nitin Sharma

Project Title: Dynamic Mode Decomposition with Sonomyography and Electromyography for Modeling Exoskeleton Walking

Project Abstract

The nonlinear dynamics required to model human-exoskeleton walking present a high computational burden that makes real-time model-based optimal control difficult. To address this problem, we use extended dynamic mode decomposition to approximate the Koopman operator. This allows us to derive a linearized model of the human-exoskeleton system. Treadmill data during exoskeleton-assisted walking are collected from participants with and without spinal cord injury and used to generate single-leg walking models. The control inputs to the model include EMG and ultrasound-derived muscle activation metrics in addition to normalized motor currents. Ground reaction force is included during the stance phase as a disturbance. We compare models which use different combinations of EMG and ultrasound-derived metrics and assess the impact of including ground reaction force on model accuracy. Accuracy was quantified by calculating the NRMSE of each state (thigh and shank angles and angular velocities) across the entire data collection period and taking the mean across states.
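The core idea, fitting a linear model in a lifted space of observables and scoring it with NRMSE, can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' model: the dictionary in `lift`, the scalar test system, and the range-based NRMSE normalization are all hypothetical choices, and the real inputs (EMG, ultrasound metrics, motor currents) are replaced by a random signal `U`.

```python
import numpy as np

def lift(X):
    """Hypothetical dictionary of observables: state, squared state, constant."""
    X = np.atleast_2d(X)  # shape (n_samples, n_states)
    return np.hstack([X, X ** 2, np.ones((X.shape[0], 1))])

def fit_edmd(X, U, X_next):
    """Least-squares fit of z_next ~ A z + B u in the lifted space."""
    Z, Z_next = lift(X), lift(X_next)
    G = np.hstack([Z, U])  # regressors [z, u], one row per sample
    AB, *_ = np.linalg.lstsq(G, Z_next, rcond=None)
    n_z = Z.shape[1]
    return AB[:n_z].T, AB[n_z:].T  # A is (n_z, n_z), B is (n_z, n_u)

def nrmse(y_true, y_pred):
    """RMSE normalized by the range of the true signal (one common convention)."""
    err = np.sqrt(np.mean((y_true - y_pred) ** 2))
    return err / (np.max(y_true) - np.min(y_true))

# Toy nonlinear system with a control input, sampled at random states.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 1))
U = rng.uniform(-1.0, 1.0, size=(200, 1))
X_next = 0.9 * X - 0.1 * X ** 2 + 0.5 * U

A, B = fit_edmd(X, U, X_next)

# One-step prediction; the first lifted coordinate is the state itself.
X_pred = (lift(X) @ A.T + U @ B.T)[:, :1]
score = nrmse(X_next, X_pred)
print("state row of A:", np.round(A[0], 3), "state row of B:", np.round(B[0], 3))
print("one-step NRMSE:", score)
```

Because the toy dynamics are exactly linear in the chosen observables, the state row of the fitted model recovers the true coefficients. With real gait data the fit is only approximate, and the choice of dictionary largely determines accuracy.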

I-Chieh Lee

ilee5@ncsu.edu

Faculty Sponsor: Helen Huang

Project Title: Effective and Efficient Personalization of Musculoskeletal Models Using Reinforcement Learning to Inform Physical Rehabilitation and Assistive Device Control

Project Abstract

Musculoskeletal models have been important tools in physical rehabilitation and assistive device control. These models involve many parameters, such as muscle length, maximum contraction force, etc., to operate appropriately. Researchers have built generic musculoskeletal models based on measurements from cadavers or data collected from many individuals without physical disabilities. Due to large inter-human variations, particularly in people with motor deficits, personalizing these models is necessary. Our group developed a novel framework that efficiently personalizes electromyography (EMG)-driven musculoskeletal models. The framework adopts a generic upper-limb musculoskeletal model as a baseline and uses an artificial neural network-based policy to fine-tune the model parameters for musculoskeletal model personalization. The policy was trained using reinforcement learning (RL) to heuristically adjust the musculoskeletal model parameters, maximizing the accuracy of estimated hand and wrist motions from EMG inputs. The results of human-involved validation experiments showed that, compared to the baseline generic musculoskeletal model, personalized models estimated joint motion with lower error in both offline (p < 0.05) and online tests (p = 0.014), underscoring the importance of model personalization. The RL-based framework was three orders of magnitude faster in tuning the model for optimized motion estimation compared to a traditional global search-based optimization method. This study presents a novel concept that combines human physical models and AI to transform generic models into user-specific ones effectively and efficiently. The personalized musculoskeletal models will be applied to inform stroke rehabilitation or neural control of prosthetic arms in the future.

Varun Nalam

vnalam@ncsu.edu

Faculty Sponsor: Helen Huang

Project Title: Inverse reinforcement learning based control for human-robot symbiosis in wearable robotics

Project Abstract

Wearable robotics hold immense promise in restoring mobility for patients with disabilities stemming from amputations or neurological disorders. These devices offer the potential to significantly enhance the quality of life by enabling more natural and efficient movement. However, a major challenge in the field is the seamless integration of robotic systems with the human user, whose performance is often compromised. Achieving appropriate control of the robot is crucial to ensure that the device functions as an extension of the user’s body. Reinforcement learning (RL) based control has been successfully implemented and demonstrated in both prostheses and exoskeletons, allowing for the personalization of robotic control to meet the unique needs of each individual user. Despite its successes, a critical aspect of RL in wearable robotics is the formulation of an appropriate cost function. The cost function guides the learning process by defining what constitutes “success” for the robotic control system, and in the context of human-robot interaction, this is particularly complex. The dynamic and intricate interactions between the human and the wearable robot make it difficult to establish a cost that is both practical and sufficiently representative of the user’s needs and goals.

To address this challenge, we proposed a bilevel optimization-based control approach that leverages inverse reinforcement learning (IRL) to determine the most appropriate cost function for each user. This inferred cost function is then used to guide the reinforcement learning process, enabling the robot to personalize its behavior according to the user’s specific requirements. We have implemented this approach on a lower limb prosthesis, demonstrating its effectiveness not only in restoring the function of missing joints but also in strengthening the weak residual joints of individuals with amputations. By accurately modeling the user’s preferences and optimizing the control strategy accordingly, our approach enhances the overall integration of the wearable robot with the user’s body. Ultimately, this method has the potential to significantly improve the autonomy and mobility of individuals with disabilities, offering them a higher degree of independence and a better quality of life.

Jimmy Tabet

jimmy_tabet@med.unc.edu

Faculty Sponsor: Shawn Gomez

Project Title: AI-Driven Innovations in Biomedical Research, Therapy Design, and Clinical Decision Support

Project Abstract

In the Gomez Lab, our research focuses on developing artificial intelligence (AI) solutions to augment and enhance clinical decision-making across various biomedical domains, with the end goal of improving patient outcomes. Our work spans areas from diagnostic tools to the rational design of novel therapies.

One example of our work is in the development of AI models to assist in breast cancer surgery. For instance, we have created deep learning models that analyze intraoperative specimen mammography images to predict whether tumor margins are clear of cancer cells. These models aim to help surgeons make more informed decisions during surgery, while still in the operating room, reducing the need for additional procedures and improving patient outcomes.

In addition to diagnostic tools, we are exploring more fundamental AI model development. As an example, we are working to improve knowledge representation approaches and have developed a Semantically-Aware Latent Space Autoencoder (SALSA) for drug discovery and development. SALSA is a novel methodology that enhances molecular representation in deep learning models by incorporating structural similarities between molecules into the model. This approach has the potential to improve the accuracy and relevance of predictions of how new drug compounds will interact at a molecular level and for rapid chemical similarity search. The underlying methodology can also be generalized to the study of other network structures such as cell signaling networks in disease for which we have developed other deep learning methodologies.

Furthermore, we are investigating the use of machine learning to predict how targeted therapies such as kinase inhibitors (a class of drugs increasingly used in cancer therapy) will affect different cancer cells and the surrounding tissue, either alone or in combination. By integrating data from large-scale kinome profiling and gene expression studies, our models can identify promising therapeutic regimes that are entirely novel or may have been overlooked by traditional empirical methods. These computational tools offer a more efficient pathway to developing effective cancer treatments while reducing toxicity and other undesired effects.

In summary, our research aims to bridge the gap between AI methodologies and practical applications in the clinic. We believe that our work has the potential to significantly impact clinical practice, from improving diagnostic accuracy to accelerating the discovery of life-saving therapies. We look forward to collaborating with others in the AI community to further these goals and to contribute to the development of integrated, AI-driven healthcare solutions.

Xiangming Xue

xxue5@ncsu.edu

Faculty Sponsor: Nitin Sharma

Project Title: Enhancing Tremor Suppression via AI-Driven Data Modeling and Afferent Stimulation Control

Project Abstract

This project focuses on advancing tremor suppression techniques through the integration of artificial intelligence (AI) and ultrasound (US) technology, specifically targeting individuals suffering from Parkinson’s Disease (PD) and Essential Tremor (ET). By harnessing the power of Dynamic Mode Decomposition (DMD) within a data-driven modeling framework, we aim to refine and validate a system that not only predicts tremor dynamics with high precision but also actively controls tremor through afferent muscle stimulation (AMS).

Central to our approach is the use of a personalized AI-enhanced model that incorporates data from US sensors to continuously monitor and analyze muscle activity. This model leverages DMD to extract dynamic patterns from high-frequency US data, thus providing a novel method for understanding tremor behaviors in real-time. Coupled with this, we employ Koopman-based system identification and a model predictive control (MPC) scheme to adjust stimulation parameters dynamically, responding to the patient-specific tremor characteristics observed.

The primary hypothesis of our research is that this multi-faceted, AI-driven model significantly improves the accuracy of tremor predictions compared to conventional methods. Moreover, it optimizes the effectiveness of afferent stimulation protocols used to suppress tremors. We anticipate that our AMS control strategy, which allows for the precise tuning of stimulation based on real-time data, will provide superior tremor suppression outcomes.

In preliminary experiments, we employed this model to simulate tremor dynamics and control in a clinical setting. Using US imaging to capture muscle movements and an inertial measurement unit (IMU) to record wrist motions, we developed comprehensive profiles of tremor behaviors under various conditions. These profiles were then used to train our model, enhancing its predictive capabilities. Notably, our findings have shown a significant reduction in normalized root mean square errors (NRMSE) for both wrist angle and angular velocity predictions when using our AI-driven model, with improvements compared to traditional one-step prediction models. This research not only underscores the potential of AI in medical diagnostics and treatment but also highlights the interdisciplinary application of AI technologies in enhancing quality of life for individuals with movement disorders.

This validation study not only seeks to affirm the efficacy of our innovative model but also to demonstrate its scalability and adaptability in real-world clinical settings. By successfully marrying AI with clinical practices, we aim to set new standards in the treatment of tremors, providing a robust, reliable, and patient-centered solution that could be adapted for a range of neuromuscular conditions.

This project exemplifies the transformative potential of AI in healthcare, particularly in the development of personalized medicine and the management of chronic conditions. It is poised to offer significant contributions to health informatics, biomedical engineering, and neuroengineering, promising to revolutionize how tremor and other movement disorders are treated in clinical environments.

Chemical and Biomolecular Engineering

Jeffrey Bennett

jabenne4@ncsu.edu

Faculty Sponsor: Milad Abolhasani

Project Title: Autonomous Catalyst Optimization for In-Flow Hydroformylation

Project Abstract

Machine learning (ML) and flow chemistry are being leveraged in chemical reaction engineering to optimize complex processes as well as to reduce time-to-discovery and enhance development. Recently, there has been a sizable increase in the use of ML algorithms to both optimize and model complex reaction systems. ML algorithms can help to rapidly identify patterns in high-dimensional reaction spaces, predict reaction outcomes, and optimize operating conditions. These data-driven approaches help to effectively leverage the high-throughput data generated by self-driving lab approaches.

One of the primary applications of ML in reaction engineering is in the development of predictive response models. These models can be used to form a digital twin of the chemical reaction and predict process outcomes, reducing the number of experiments needed to achieve the desired reaction outcome through optimization, lowering the cost and time associated with catalyst development. After the initialization and optimization campaign, the trained model can be investigated for fundamental knowledge of the effects of process variables on reaction outcome.

Flow chemistry is another powerful tool that can reduce the time and cost associated with the discovery and development of chemical processes. Automated flow reactors can provide superior control over the reaction conditions, minimize human error, intensify transport rates, and improve process consistency. Furthermore, automation can be coupled to in-line analysis for monitoring and characterization of the reaction in real-time, providing feedback to adjust the reaction conditions and optimize the reaction process in a closed-loop format.

In this work, we extend our recent efforts of reaction condition optimization in a self-driving flow reactor to include discrete variables such as ligand structure as well as continuous process parameters. Extending the scope of the optimization to include ligand structure allows for the identification of an optimal ligand species given a ligand library and hydroformylation reaction target. The automated reactions in parallel batch and flow are leveraged alongside the ML-based experimental selection to perform autonomous regioselectivity tuning and optimization as well as developing a molecular structure-informed digital twin of the reaction.

Michael Bergman

mbergma2@ncsu.edu

Faculty Sponsor: Carol K. Hall

Project Title: Computational Discovery of Plastic-Binding Peptides for Microplastic Pollution Remediation

Project Abstract

Micro- and nanoplastics (MNPs), defined as plastics on the micrometer or nanometer scale, are a concerning pollutant. Millions of tons of MNPs pervade the environment. This results in humans and other organisms unintentionally consuming MNPs, which can negatively impact health. It is thus urgent to develop methods for remediating MNP pollution. Noting that polypeptides can adsorb strongly to polymeric materials, we aim to computationally discover plastic-binding polypeptides (PBPs) to help detect, capture, and/or biodegrade MNP pollution. We focus in particular on the plastics polyethylene, polystyrene, polypropylene, and PET, which are common components of MNP pollution. To discover PBPs, we first used Peptide Binding Design (PepBD), a peptide design program based on biophysical modeling. PepBD sampled 10⁵ to 10⁶ peptides per plastic to identify a set of potential PBPs for each plastic. We next searched for additional PBPs by training a machine learning (ML) model on PepBD data. The ML model had two components: one that predicts peptide affinity (i.e., a PepBD score) based on the peptide’s amino acid sequence, and a second that optimizes the amino acid sequence with guidance from the score predictor. Specifically, the score predictor was a long short-term memory (LSTM) network, while the sequence generator was Monte Carlo Tree Search (MCTS). The tunability of the MCTS reward function allowed us to simultaneously optimize multiple peptide properties, giving rise to PBPs that also have high predicted solubility in water and bind with greater specificity to a target plastic than to a non-target plastic. Measurement of peptide affinity in both molecular dynamics simulations and atomic force microscopy shows that both PepBD and ML PBPs have higher affinity to plastic than random peptides, and that ML designs have greater affinity to plastic than PepBD designs. We thus believe that the discovered PBPs can help develop bio-based methods that address MNP pollution.

Sina Jamalzadegan

sjamalz@ncsu.edu

Faculty Sponsor: Qingshan Wei

Project Title: Smart Agriculture: Early Detection of Plant Diseases Using Wearable Sensor Data and Machine Learning

Project Abstract

The United Nations predicts that by 2050, the global population will reach 10 billion, necessitating a 60% increase in food productivity to ensure food security. One of the primary threats to this goal is plant diseases, which result in crop losses ranging from 20 to 40% annually. These losses not only diminish food production but also have far-reaching consequences, including impacts on biodiversity, socioeconomic stability, and the cost of disease control, which together undermine global food security.

To address these challenges, innovative technologies are being developed within the realm of smart agriculture, such as wearable plant sensors. Our research focuses on a novel multimodal wearable sensor attached to the lower surface of plant leaves. This sensor continuously monitors plant health by tracking both biochemical and biophysical signals, as well as environmental conditions. The sensor platform integrates the detection of volatile organic compounds (VOCs), temperature, and humidity—critical indicators of plant stress and disease.

In addition to the wearable sensor technology, we have developed an unsupervised machine learning (ML) model using principal component analysis (PCA) designed to analyze the multichannel real-time data collected by the sensors. This model has shown promising results in the early detection of plant diseases, particularly the tomato spotted wilt virus (TSWV), which it can identify as early as four days post-inoculation. Furthermore, our analysis reveals that the accurate detection of this virus requires a combination of at least three sensors, with VOC sensors being crucial for reliable predictions.

This integration of advanced wearable sensor technologies with sophisticated machine learning techniques represents a significant step forward in smart agriculture. By enabling early detection of plant diseases, this system offers the potential to mitigate crop losses, reduce the costs associated with disease management, and contribute to more sustainable global food systems. Through innovations like these, we aim to support the global push towards increased food productivity and secure a more resilient future for agriculture.

Moritz Woelk

mwoelk@ncsu.edu

Faculty Sponsor: Wentao Tang

Project Title: Data-driven State Observation by Kernel Canonical Correlation Analysis

Project Abstract

Data-driven State Observation by Kernel Canonical Correlation Analysis

The difficult nature of modeling the states of chemical processes stems from the intrinsic nonlinearity within system dynamics. Deriving models that accurately represent the internal states of nonlinear processes is challenging due to the complexity of the governing equations, making the process both time-consuming and computationally expensive. In order to derive and reduce governing equations, knowing the system’s internal states is a prerequisite. Therefore, constructing a state observer, specifically an auxiliary dynamical system that uses the system’s inputs and outputs to estimate the internal states, allows for the inference of the system’s behavior without requiring direct measurements of those internal states. By solely utilizing accessible system data, the states’ information can be determined by finding the relationship between past and future input and output measurements.

Thus, kernel canonical correlation analysis (KCCA) is adopted in this work for the reconstruction of a state sequence from a nonlinear dynamical system that incorporates both past and future data, circumventing the need for physics-informed models. Specifically, by using a nonlinear kernel function, which maps the data into a feature space, we employ canonical correlation analysis (CCA) to find a subspace from two datasets (i.e., past and future input and output measurements) with maximal correlation. Based on the formulation using Mercer’s Theorem and the kernel trick, an appropriate form of regularization can be further added by adopting a least squares support vector machine (LS-SVM) approach with KCCA. In this formulation, the squared error is minimized with regularization on the weight vectors. This regularized KCCA is a convex optimization problem, which results in solving a generalized eigenvalue problem in the dual space. This approach is amenable to artificial intelligence (AI) through the use of machine learning techniques (such as kernel methods and support vector machines) to learn a reconstruction of the states by finding relationships between two datasets, resulting in a representation that closely matches the true states. The application to a numerical example and an exothermic continuous stirred-tank reactor (CSTR) case study demonstrates that KCCA is a sufficient, performance-guaranteed, model-free state observer, provided adequate data are available.

Jinge Xu

jxu49@ncsu.edu

Faculty Sponsor: Milad Abolhasani

Project Title: Smart Manufacturing of Metal Halide Perovskite Nanocrystals Enabled by a Multi-Robot Self-Driving Lab

Project Abstract

Smart Manufacturing of Metal Halide Perovskite Nanocrystals Enabled by a Multi-Robot Self-Driving Lab

All-inorganic metal halide perovskite (MHP) quantum dots (QDs) have emerged as a highly promising class of semiconducting nanomaterials for various solution-processed photonic devices. These quantum-confined nanocrystals exhibit unique optical properties that can be precisely engineered by altering their composition, shape, size, and geometry (1). The surface ligation of MHP QDs relies on an acid-base equilibrium reaction, which is commonly utilized not only to provide colloidal stability in organic solvents but also to tune their optical properties. The use of different organic acids as surface ligands leads to distinct growth pathways and thereby different QD morphologies (2). Consequently, the optical characteristics of MHP QDs are strongly affected by both the ligand structure (discrete parameter) and the precursor concentrations (continuous parameters). The multidimensional nature of this parameter space makes it extremely challenging to efficiently explore and understand the underlying mechanism of synthesis. Traditional synthesis methods for MHP QDs are time-consuming, material-intensive, and laborious, relying on manual flask-based techniques. The manual nature of these methods, along with the interdependent reaction and processing parameters in colloidal QD synthesis, hinders the discovery of optimal formulations and fundamental understanding of MHP QDs (3).

In this work, we have developed a multi-robot self-driving lab (SDL) for accelerated synthesis space mapping of room temperature-synthesized colloidal semiconductor nanocrystals. The developed SDL enables automated and closed-loop investigation of the effects of ligand structure and precursor concentrations on the photon-conversion efficiency, nanocrystal size uniformity, and bandgaps of MHP QDs. Next, we utilized the developed multi-robot SDL to autonomously map the Pareto front of MHP QDs’ optical properties for multiple target peak emission wavelengths.

We overcame challenges of conventional applied and fundamental QD research by investigating the science and engineering of a modular intelligent robotic experimentation platform. We established a closed-loop QD synthesis and development strategy by integrating a modular robotic experimentation platform with data-driven modeling and experiment-selection algorithms. The developed SDL accelerated both the mapping of the optical properties of MHP QDs to the ligand structures and synthesis conditions and the understanding of the underlying role of ligand structure in the shape, morphology, and optical properties of MHP QDs. The SDL-generated knowledge will enable on-demand synthesis of MHP QDs with optimal optical properties for next-generation energy and display technologies.
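The notion of a Pareto front used in the mapping step can be illustrated with a minimal sketch. The two objective names and every number below are hypothetical placeholders, not SDL measurements, and both objectives are assumed to be maximized. This only shows what "non-dominated" means; it is not the experiment-selection algorithm used in the poster.

```python
from typing import List, Tuple

# (efficiency, uniformity): hypothetical objectives, both to be maximized.
Point = Tuple[float, float]


def dominates(a: Point, b: Point) -> bool:
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the non-dominated points, preserving input order."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Illustrative experiment outcomes (made-up values).
experiments = [(0.60, 0.90), (0.80, 0.70), (0.70, 0.60), (0.90, 0.40), (0.50, 0.50)]
front = pareto_front(experiments)
print(front)
```

In this toy set, the points (0.70, 0.60) and (0.50, 0.50) are dropped because another experiment is at least as good in both objectives.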

Civil, Construction and Environmental Engineering

Ange Therese Akono

aakono@ncsu.edu

Faculty Sponsor: Ange Therese Akono

Project Title: Novel recycled aggregate concrete using nanomaterials and AI

Project Abstract

Novel recycled aggregate concrete using nanomaterials and AI

The use of recycled concrete aggregates for new concrete production is an important avenue to increase the sustainability of concrete. While numerous studies have demonstrated the benefit of nanosilica on recycled aggregate concrete, a connection between the enhanced properties and the properties of the calcium-silicate-hydrates is unproven. Thus, using AI, we investigate how nanosilica modifies the distribution of calcium-silicate-hydrates in fine recycled concrete aggregate mortar. Using an interdisciplinary approach of nanomechanical testing, data science, and advanced chemical, microstructural and pore structure characterization, we find that 12 wt.% nanosilica results in a 30.8% increase in the combined relative amount of high-density and ultra-high-density C-S-H, 16.7% reduction in C-S-H gel porosity, 24.4% reduction in total porosity, and 3.9% increase in fracture toughness.

Kichul Bae

kbae2@ncsu.edu

Faculty Sponsor: Sankar Arumugam, Ranji Ranjithan

Project Title: AI-based Flood Prediction: Role of ML in Water Depth and Flow Estimation from Camera Images

Project Abstract

AI-based Flood Prediction: Role of ML in Water Depth and Flow Estimation from Camera Images

Early warning of flooding, which helps minimize loss of life and property, requires integration of different physics-based models (e.g., atmospheric models, watershed models and urban stormwater models) and current water level and streamflow conditions to forecast spatio-temporal variations in flooding. Combining the quantitative model predictions with site-specific experiential knowledge from past flood events enables an artificial intelligence (AI)-based flood prediction framework to provide targeted flood warnings for the community. A major challenge is finding water level and streamflow data at local scale to capture community-level flood inundation. Stream gauges, which are sparsely distributed, provide data only at the river basin scale that are inadequate to capture flood inundation, especially in urban areas. Fortunately, ubiquitous video images are available (e.g., from cameras used for traffic monitoring, public surveillance and home security) as potential sources for extracting water level data. We are developing a machine learning (ML) approach, using convolutional neural networks, to associate the inundation features in the images with the limited stream water level and flow data. These ML models utilize city-wide camera images to estimate water level and flow at an urban extent. These data can also be used to calibrate and validate the physics-based watershed and stormwater models, to correct systematic errors associated with physics-based models, and to enhance the flood warnings for locations with limited flood data. Initial investigations based on thirteen locations with USGS stream gauges in Mecklenburg County, NC, show potential in utilizing camera images in the proposed approach to issue city-wide flood warnings.

Lochan Basnet

lbasnet@ncsu.edu

Faculty Sponsor: Kumar Mahinthakumar, Ranji Ranjithan

Project Title: Role of Machine Learning Approaches in Localizing Pipe Leaks in Water Distribution Systems (WDSs)

Project Abstract

Role of Machine Learning Approaches in Localizing Pipe Leaks in Water Distribution Systems (WDSs)

Leak localization is critically important for water utilities worldwide, as the significant loss of treated water each year results in substantial economic, infrastructural, and environmental impacts. However, addressing leak localization remains challenging due to the prohibitive costs of physical solutions and the limitations associated with data-driven, model-based approaches, exacerbated by limited data availability. While the simulation of hydraulic models has mitigated data limitations, further advancements are required in both the conceptualization and formulation of the leak localization problem, as well as in algorithmic development. A review of the current state of research reveals that most model-based studies, although promising, rely on simplifying assumptions that do not adequately capture the complexities of real-world leak localization in water distribution systems (WDSs). These assumptions hinder the practical application of these methodologies in addressing actual leaks.

Our research aims to provide a data-driven leak localization solution that holistically examines the leak complexities, focusing on various critical aspects such as the expansiveness of WDSs, leak characteristics, uncertainties, leak characterization resolution, and the integration of multi-input data. At the core of our approach are machine learning (ML) algorithms, which have demonstrated their efficacy across numerous domains, including WDSs. Considering the wide array of ML algorithms available, our selection of three established ML models, namely Multilayer Perceptron (MLP), Convolutional Neural Networks (CNN), and Transformers, offers an adequate space for exploration and comparison. Our ML-based approach for comprehensive leak localization was tested on a large-scale WDS in a major metropolitan area of California under both simulated and real-world leak scenarios. The results are highly promising, with high accuracy achieved in both simulated and real settings.

Gongfan Chen

gchen24@ncsu.edu

Faculty Sponsor: Edward Jaselskis

Project Title: Meet2Mitigate: An LLM-Powered Framework for Real-Time Issue Identification and Mitigation from Construction Meeting Discourse

Project Abstract

Meet2Mitigate: An LLM-Powered Framework for Real-Time Issue Identification and Mitigation from Construction Meeting Discourse

Construction meetings are essential for bringing together project participants to coordinate efforts, identify problems, and make decisions. Previous studies on meeting analysis relied on manual approaches to identify isolated pieces of information but struggled with providing a high-level overview that targeted real-time problem identification and resolution. Despite the rich discussions that occur, the sheer volume of information exchanged can make it difficult to discern key issues, decisions, and action items. Recent advancements in large language models (LLMs) provide sophisticated natural language processing capabilities that can effectively distill essential information and highlight actionable insights from meeting transcripts. However, these technologies are often underutilized in practice, despite their potential to significantly enhance the analysis and management of meeting data. This research introduced the Meet2Mitigate (M2M) framework, which integrates cutting-edge technologies, including speaker diarization, automatic speech recognition (ASR), LLMs, and retrieval-augmented generation (RAG), to revolutionize how construction meetings are captured and analyzed. In this framework, construction meeting recordings can be converted into a structured format, differentiated by timestamps, speakers, and corresponding contents. Different speakers’ dialogues are then summarized to extract the main project-related issues. For quick mitigation responses, this framework combines LLMs with a retrieval mechanism to access the Construction Industry Institute (CII) Best Practices (BPs) knowledge pool, generating detailed action items to drive problem solving. The validation results demonstrated that the M2M prototype can automatically generate a tailored end-to-end problem-to-solution report in real time using only a meeting recording file.
The resulting problem-to-solution reports can be promptly shared with project teams, including non-attendees, ensuring an effective meeting recap and quick alignment on decisions among the teams.
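The retrieval step of a RAG pipeline like the one described above can be sketched in a few lines. This is a toy illustration under stated assumptions: bag-of-words cosine similarity stands in for whatever retriever M2M actually uses, the knowledge entries are hypothetical placeholders rather than actual CII Best Practices text, and the prompt is only assembled, with no LLM call.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words; a stand-in for a learned text embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(issue: str, knowledge: Dict[str, str], k: int = 1) -> List[Tuple[str, float]]:
    """Rank knowledge entries by similarity to the issue summary and keep the top k."""
    query = tokenize(issue)
    scored = [(name, cosine(query, tokenize(text))) for name, text in knowledge.items()]
    return sorted(scored, key=lambda s: s[1], reverse=True)[:k]


# Hypothetical placeholder entries; NOT actual CII Best Practices text.
knowledge_pool = {
    "change management": "process to evaluate and approve scope change and design change requests",
    "materials management": "planning procurement delivery and storage of materials on site",
    "safety planning": "identify hazards and plan safety measures before work starts",
}

# Hypothetical issue summary, as might be extracted from a meeting transcript.
issue_summary = "Steel delivery is late and on-site storage of materials is full."
top = retrieve(issue_summary, knowledge_pool, k=1)

# The retrieved entry is placed in the prompt that would be sent to an LLM.
prompt = f"Issue: {issue_summary}\nRelevant practice: {top[0][0]}\nPropose action items."
print(top[0][0])
```

Grounding the prompt in a retrieved entry is what lets the generated action items refer to a specific practice instead of relying only on the model's general knowledge.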

Panji Darma

pndarma@ncsu.edu

Faculty Sponsor: Moe Pourghaz

Project Title: Imaging Biological Healing in Concrete Vasculature Network Using Hybrid Electromagnetic Method and Convolutional Neural Network

Project Abstract

Imaging Biological Healing in Concrete Vasculature Network Using Hybrid Electromagnetic Method and Convolutional Neural Network

Microbially Induced Calcium Carbonate Precipitation (MICP) is an innovative bio-based technique that has gained significant attention for its potential to enhance the healing and durability of concrete. This process utilizes specific bacteria, such as S. pasteurii, which precipitate calcium carbonate (CaCO₃) as a byproduct of their metabolic activities, filling cracks and improving structural integrity. However, the efficiency and effectiveness of MICP rely on a comprehensive understanding of the spatial and temporal dynamics of CaCO₃ precipitation within the concrete matrix. To monitor the MICP process, we propose a novel imaging approach that combines Ground-Penetrating Radar (GPR) and Electrical Impedance Tomography (EIT), enhanced by Convolutional Neural Networks (CNN). GPR is a well-established method for detecting subsurface cracks in concrete through reflection waves, while EIT effectively visualizes biological activity via conductivity changes. By integrating these imaging techniques with CNN, we aim to achieve high-resolution, real-time visualization of the biological healing process within concrete vasculature networks. Our approach combines these techniques with CNN in a three-stage process: (a) identifying subsurface cracks using GPR reflection waves and training the CNN to generate a 3D geometry of the cracks; (b) performing planar EIT finite element computation based on the subsurface crack geometry provided by the CNN; and (c) monitoring the MICP process using planar EIT. This approach promises to improve the monitoring, optimization, and overall effectiveness of MICP in concrete, paving the way for more durable and sustainable construction materials.

Shiqi Fang

sfang6@ncsu.edu

Faculty Sponsor: Sankar Arumugam

Project Title: A Complete Density Correction of GCM Projections Using Normalizing Flows

Project Abstract

A Complete Density Correction of GCM Projections Using Normalizing Flows

Global Climate Models (GCMs) provide essential information for impact analysis, adaptation pathways, and prediction studies. However, GCMs often contain systematic errors that require correction against observations before their use in impact assessments, especially for application studies. Traditional univariate bias corrections based on quantile mapping do not preserve cross-relations across multiple variables. Despite recent advances in multivariate bias correction methods which perform better than univariate methods, fully correcting the joint density of multiple variables from GCM projections still remains challenging. In this study, we propose an AI-based density correction method, normalizing flow (NF), to correct precipitation (P) and near-surface maximum air temperature (T) from CMIP6 projections. NF offers several advantages over traditional methods, including the ability to model complex, multidimensional dependencies and transform the distribution of variables to match the joint probability density function (PDF) of observed data. We assess the performance of NF-corrected CMIP6 projections over the historical period (1951–2014) across the continental US, focusing on the ability to reproduce inter-variable relationships and spatio-temporal consistency. Results indicate that NF effectively corrects biases in the joint density of CMIP6 projections and also preserves spatial patterns and cross-correlation between variables. Compared to other methods such as MACA, quantile mapping, and canonical-correlation analysis, NF best matches the PDF of observations with the smallest Wasserstein distance both spatially and temporally for P and T, and better quantifies extreme events compared with observations. NF also demonstrates consistently strong performance across different seasons and climate regions. 
Thus, NF could not only bias-correct moments in observed variables but also correct the joint density of climate variables from CMIP6 projections, thereby showing promise in improving the estimation of hydroclimatic extremes using GCM projections.
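For context, the univariate quantile-mapping baseline mentioned above, and the Wasserstein distance used to compare distributions, can be sketched as follows. This is not the normalizing-flow method; the sample values are synthetic, and the nearest-rank rule is one simple choice among several for the inverse empirical CDF.

```python
from bisect import bisect_right
from typing import List


def quantile_map(model_hist: List[float], obs_hist: List[float], value: float) -> float:
    """Map a model value to the observed value at the same empirical quantile."""
    model_sorted, obs_sorted = sorted(model_hist), sorted(obs_hist)
    rank = bisect_right(model_sorted, value)  # number of model values <= value
    # Nearest-rank inverse CDF on the observations, in integer arithmetic.
    idx = (rank * len(obs_sorted) + len(model_sorted) - 1) // len(model_sorted) - 1
    idx = max(0, min(len(obs_sorted) - 1, idx))
    return obs_sorted[idx]


def wasserstein_1d(a: List[float], b: List[float]) -> float:
    """1-D Wasserstein-1 distance for equal-size samples: mean gap between sorted values."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal-size samples")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


# Synthetic temperatures: the "model" is too warm and too spread out.
obs = [10.0, 12.0, 14.0, 16.0, 18.0]
model = [13.0, 16.0, 19.0, 22.0, 25.0]

corrected = [quantile_map(model, obs, v) for v in model]
print(wasserstein_1d(model, obs), wasserstein_1d(corrected, obs))
```

Applied to each variable separately, this mapping adjusts only the marginal distributions, which is the limitation of univariate methods that motivates a joint-density correction.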

Krishna Mohan Ganta

gkrishn5@ncsu.edu

Faculty Sponsor: Jacqueline MacDonald Gibson

Project Title: ASSESSING PERFORMANCE OF A HYBRID MECHANISTIC GROUNDWATER / MACHINE-LEARNED BAYESIAN NETWORK (MLBN) MODEL

Project Abstract

ASSESSING PERFORMANCE OF A HYBRID MECHANISTIC GROUNDWATER / MACHINE-LEARNED BAYESIAN NETWORK (MLBN) MODEL

To better manage emerging public health challenges, communities, water utilities, and regulators must improve methods to assess and prioritize environmental health risks. PFAS exposure in North Carolina is a prime example where methodologies for predicting and mitigating risks are developing concurrently with contaminant regulations and the allocation of mitigation funding. This project aims to predict the risk of private wells exceeding the provisional health goal for the PFAS GenX. PFAS compounds are notoriously difficult to model with mechanistic groundwater flow and fate and transport models. The sheer number of different PFAS chemicals and the uncertainty in their individual and interacting characteristics make them complex to model in the environment. Mechanistic models are also resource-intensive to develop and calibrate. This project builds upon previous work that developed a Machine-Learned Bayesian Network (MLBN) classification model to predict at-risk wells; current work integrates outputs from a mechanistic groundwater fate and transport model as input variables to new MLBNs, classified as low-, medium-, and high-effort models in terms of mechanistic modeling resources required. Performance of each model is compared to the mechanistic model predictions of at-risk wells using several performance metrics, including accuracy, area under the receiver operating characteristic curve (AU-ROC) and F-score curves, and the importance of each metric and model performance is discussed in the context of environmental health risks. Results show that MLBNs perform as well as the mechanistic models in accuracy and AU-ROC performance metrics, while being more robust in terms of the range of decision thresholds selected for risk classification. High-effort models make slight improvements in AU-ROC metrics, while more easily incorporating insights from mechanistic model performance without the need to recalibrate the mechanistic model.
The project aims to assist regulators to advance public health and methodologies to integrate traditional engineering models with machine-learning approaches.
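The two threshold-related metrics named above can be made concrete with a small sketch. The labels and risk scores below are made up for illustration and are not project data; the F-score is taken here to be F1, which is an assumption since the abstract does not state which F-score is used.

```python
from typing import List


def roc_auc(labels: List[int], scores: List[float]) -> float:
    """AU-ROC as the chance that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def f1_at_threshold(labels: List[int], scores: List[float], threshold: float) -> float:
    """F1 score when wells with score >= threshold are classified as at risk."""
    pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for y, p in zip(labels, pred) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, pred) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, pred) if y == 1 and p == 0)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


# Made-up example: 1 = well exceeds the health goal, score = predicted risk.
labels = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.2, 0.1]

auc = roc_auc(labels, scores)
# An F-score curve is simply the F-score evaluated over a range of thresholds.
f1_curve = {t: f1_at_threshold(labels, scores, t) for t in (0.35, 0.5, 0.8)}
print(round(auc, 3), {t: round(v, 3) for t, v in f1_curve.items()})
```

AU-ROC summarizes ranking quality across all thresholds, while the F-score curve shows how sensitive a classifier is to the particular decision threshold chosen, which is the robustness property discussed above.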

Timothy Leung

tleung@ncsu.edu

Faculty Sponsor: Jacqueline MacDonald Gibson

Project Title: Targeting Homes with High Lead Exposure Risks by Leveraging Big Data and Advanced Machine-Learning Algorithms

Project Abstract

Targeting Homes with High Lead Exposure Risks by Leveraging Big Data and Advanced Machine-Learning Algorithms

Although reductions in lead exposure have been significant in recent decades, there is a current misconception that lead exposure is no longer a health issue due to its elimination from many products. As of 2023, the World Health Organization still identifies lead as one of the top 10 chemicals of public health concern among workers, children, and women of reproductive age. While the prevalence of elevated blood lead levels is gradually declining, it has been estimated that 1 in 3 children is not screened and reported for elevated blood lead at both the state and federal levels. Even among adults, the accumulation of lead exposures from the home and work environment can lead to reduced heart, brain, and kidney function later in life.

Past and existing efforts to mitigate or eliminate household lead exposures still face several challenges. Currently, Pb remediation in the U.S. is often triggered by the detection of elevated Pb in a child’s blood. Relying on children as sentinels of Pb exposure risk means that remediation may come too late, after the damage is done. In addition, it fails to prevent lower levels of exposure, which may not increase blood Pb enough to require an inspection but may nonetheless increase the risk of cognitive damage. Due to the complexity of multi-media lead exposure routes, it is difficult to identify and prioritize areas within the home that may have varying levels of lead. Hazard identification approaches based on simple thresholds of house age and/or census-level demographic information fail to capture opportunities that arise from integrating multiple data sources. When combined systematically, integrated data sources can reveal important interactions among risk factors that may have synergistic effects.

This project applies a precision healthy housing approach where statistical and machine learning models are trained to better predict which houses are at risk and which interventions are likely to be most effective against these context-specific hazards. Results of preliminary models trained on big datasets curated from tax parcel, demographic, and physical/environmental data sources in NC demonstrate a marked improvement in true positive rate (50 to 80%) over existing lead risk criteria in identifying individual homes for remediation, with fewer resources wasted on false positives, and without relying on children’s blood lead levels (BLLs) to trigger remediation after exposure. BLL test year, year of home construction, median census block group household income, percent of block group identifying as Black, number of drinking water system connections, and child age are some of the most influential variables in predicting lead risk. Next steps are to identify the optimal model performance, subset of input variables required, risk threshold, and data resolution required to achieve these risk prediction improvements.

Jessica Levey

jrlevey@ncsu.edu

Faculty Sponsor: Sankar Arumugam

Project Title: Subseasonal-to-Seasonal Streamflow Forecast

Project Abstract

Subseasonal-to-Seasonal Streamflow Forecast

Climate change is altering global hydroclimatology and threatening the majority of the world’s fresh water sources. In addition to climate change, population and land use change are stressing water sources and will amplify the water scarcity crisis. Rivers are dammed to create reservoirs, which serve various purposes including water supply, flood control, hydropower, and irrigation. Reservoir releases are modified during droughts and floods to meet water demand without increasing downstream risk. Mismanagement of water resources may threaten agriculture and food supply chains, human and environmental health, and regional economies. Accurate streamflow forecasts are essential for effective water resource management and planning. Most reservoirs are operated by considering only short-range inflow forecasts with a 1-7 day lead time, which are important for determining daily releases to meet daily water supply and energy demands and for managing flash floods and storm-caused flood events. Reservoir operations do not consider forecasts at the subseasonal-to-seasonal (S2S) range, 15-90 days ahead. S2S forecasts are important for energy grid planning, meeting storage demands for irrigated agriculture, drought effect mitigation, and maintaining long-term storage and flow conditions for meeting ecological demands. This study a) develops a large-scale framework using a multivariate long short-term memory (LSTM) model to produce S2S streamflow forecasts for large-scale water resource management and b) evaluates the forecast performance for below normal, normal, and above normal flows. As hydrologic extremes are becoming more frequent and intense, accurate, longer-range forecasts will be crucial for meeting water demand and effectively managing water resources.
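Evaluating forecasts separately for below normal, normal, and above normal flows could look like the sketch below. It assumes the three categories are terciles of a historical climatology, which is a common convention but is not stated in the abstract; the hit rate is one illustrative metric, and all flow values are made up.

```python
from statistics import quantiles
from typing import Dict, List, Tuple

CATEGORIES = ("below normal", "normal", "above normal")


def tercile_bounds(climatology: List[float]) -> Tuple[float, float]:
    """Lower and upper tercile cut points of the historical flows."""
    lower, upper = quantiles(climatology, n=3)
    return lower, upper


def categorize(flow: float, lower: float, upper: float) -> str:
    """Assign a flow to one of the three tercile categories."""
    if flow < lower:
        return CATEGORIES[0]
    if flow > upper:
        return CATEGORIES[2]
    return CATEGORIES[1]


def hit_rate_by_category(observed: List[float], forecast: List[float],
                         lower: float, upper: float) -> Dict[str, float]:
    """Fraction of observations in each category whose forecast fell in the same category."""
    hits = {c: 0 for c in CATEGORIES}
    counts = {c: 0 for c in CATEGORIES}
    for obs, fc in zip(observed, forecast):
        cat = categorize(obs, lower, upper)
        counts[cat] += 1
        hits[cat] += int(categorize(fc, lower, upper) == cat)
    return {c: hits[c] / counts[c] for c in CATEGORIES if counts[c]}


# Made-up flows in arbitrary units.
climatology = [10, 20, 30, 40, 50, 60, 70, 80, 90]
observed = [15, 25, 45, 55, 75, 85]
forecast = [20, 40, 50, 60, 65, 90]

lower, upper = tercile_bounds(climatology)
rates = hit_rate_by_category(observed, forecast, lower, upper)
print(rates)
```

Reporting skill by category in this way makes it visible when a model does well on normal flows but misses the extremes, which matter most for drought and flood management.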

Harleen Kaur Sandhu

hksandhu@ncsu.edu

Faculty Sponsor: Abhinav Gupta

Project Title: Digital Twin for Nuclear Piping Systems: Harnessing Deep Learning for Advanced Condition Monitoring

Project Abstract

Digital Twin for Nuclear Piping Systems: Harnessing Deep Learning for Advanced Condition Monitoring

Advanced nuclear reactors represent a significant breakthrough in energy technology due to their compact design, enhanced robustness, superior reliability, and high capacity factors. These reactors are particularly suitable for deployment in remote areas or regions affected by disasters, providing a critical and dependable power supply in challenging conditions. Their high efficiency and reduced greenhouse gas emissions make them a viable choice for sustainable energy production. To facilitate widespread adoption, it is important to minimize life-cycle maintenance costs while ensuring the long-term structural integrity of the reactors.

Recent advancements in artificial intelligence (AI) and deep learning algorithms have made Digital Twin (DT) technology a practical solution for the autonomous operation of advanced nuclear reactors. Enhanced DT frameworks present a cost-effective solution for the continuous monitoring of system health, particularly in safety-critical components such as piping and equipment systems. These systems play a vital role in the efficient transfer of coolant within nuclear facilities. Yet, they are susceptible to flow-accelerated corrosion and erosion, which can cause thinning of pipe walls and, if unchecked, lead to critical infrastructure failures.

Traditional non-destructive testing (NDT) methods, while effective, are labor-intensive and often necessitate the shutdown of power plants, resulting in substantial financial impacts on electric utility companies. The integration of AI-driven DTs with advanced condition monitoring capabilities allows for real-time analysis of sensor data, providing early warnings of degradation and enabling proactive maintenance. Machine learning algorithms, particularly those trained on large datasets, are adept at detecting subtle signs of structural damage in piping systems, thereby reducing reliance on costly NDT equipment and decreasing the frequency of plant outages required for inspections.

This research proposes a diagnostic digital twin for nuclear piping systems, including their design, construction, and continuous condition monitoring throughout their operational life. During the design and construction phases, digital tools are developed to facilitate the seamless integration between Building Information Modeling (BIM) software and Finite Element (FE) structural design software, ensuring accurate virtual compatibility checks and proper installation fit. The condition monitoring framework leverages AI-based methodologies to detect and quantify degradation, such as pipe wall thinning due to flow-accelerated corrosion (FAC), which occurs as a result of the transportation of high-temperature and high-pressure fluids in nuclear power plants.

A laboratory-based demonstration of an “as-built” diagnostic digital twin is conducted, focusing on a piping system subjected to conditions representative of real-world nuclear facilities. This demonstration involves both simulated and empirical data to track aging and degradation over time. The case study includes a laboratory installation where the piping system undergoes controlled vibrations to develop a robust diagnostic workflow. Deep learning algorithms, including Multi-Layer Perceptrons (MLPs) and Convolutional Neural Networks (CNNs), are evaluated for their diagnostic accuracy and computational efficiency. Additionally, advanced data processing techniques, such as domain randomization, are employed to enhance the generalization and reliability of the AI models. By integrating AI with DT technology, this research aims to aid the maintenance and operation of advanced nuclear reactors, ensuring their safety, efficiency, and longevity.

Liannian Wang

lwang64@ncsu.edu

Faculty Sponsor: Kevin Han, Abhinav Gupta

Project Title: Advancing Construction Requirements Management through Generative AI-Driven Workflow

Project Abstract

Advancing Construction Requirements Management through Generative AI-Driven Workflow

Construction engineering, particularly for large-scale projects like nuclear power plants, is inherently complex and requires strict adherence to high-quality and safety standards. Ensuring that construction activities align with approved designs and standards is crucial for safeguarding the facility, the environment, and human life. However, 98% of megaprojects experience an average cost overrun of 80% and a delay of 20 months, with ineffective management of construction requirements being a critical factor. The current documentation-based manual processes are labor-intensive, time-consuming, and prone to errors and omissions, leading to significant vulnerability to cost overruns and delays. While advanced technologies exist, they are often unsuitable or difficult to apply to most construction projects, requiring further optimization. These challenges highlight the urgent need for innovative solutions incorporating advanced technologies, particularly artificial intelligence (AI), to streamline processes and democratize intelligence in construction projects.

This research proposes a transformative approach to construction requirements management by integrating generative AI (GenAI) into the workflow. The goal is to shift from manual and error-prone practices to data-driven automated systems, thereby enhancing the efficiency and accuracy of managing construction requirements. GenAI, with its streamlined model-building and training process, offers an accessible and cost-effective alternative to traditional construction requirements management, making intelligence more accessible to a broader range of users.

The GenAI-integrated process begins by developing a well-structured and domain-specific ontology. This ontology is the foundation for the AI’s understanding of construction-specific terminology and relationships, providing a framework that ensures accurate, consistent, and contextually relevant outputs. By guiding the AI’s interpretation of domain-specific contexts, the ontology enables GenAI to deliver precise results that align with industry standards. Unlike other methods that require extensive domain expertise and technical knowledge, the ontology-supported GenAI approach is more adaptable and scalable. It allows for the seamless conversion of extensive requirement documents into structured inputs for the GenAI system, significantly speeding up the process and reducing the risk of errors typically associated with manual documentation practices. Then, to support this workflow, a user-friendly platform will be developed to facilitate collaboration among project stakeholders, ensuring smooth data transfer and interaction. Moreover, the GenAI capabilities will be extended to analyze project performance data, providing stakeholders with informed decision-making support through clear and accessible reporting mechanisms. This comprehensive workflow aims to enhance construction requirement management and project performance using advanced AI technology, fostering effective stakeholder collaboration and delivering real-time insights to practitioners. By expediting decision-making and improving project outcomes, this approach envisions a future where AI-driven intelligence becomes a universal asset in the construction industry, making advanced technological support accessible and beneficial to all stakeholders involved.

Computer Science

Muhammad Alahmadi

mjalahma@ncsu.edu

Faculty Sponsor: Dongkuan Xu

Project Title: Reliable Synthetic Data Generation

Project Abstract

Reliable Synthetic Data Generation

The emergence of Large Language Models (LLMs) represents a major advancement in Artificial Intelligence (AI). These models can generate synthetic data, which plays a crucial role in boosting LLM performance and facilitating the training of smaller models, particularly when high-quality datasets are limited or unavailable. One key factor in the effectiveness of synthetic data is diversity, which is essential for enhancing the robustness and generalization capabilities of the models. However, despite the significant role of data diversity in improving model performance, more comprehensive research is needed on optimizing data diversification methods and on developing measures for evaluating data diversity. This gap highlights the need for more in-depth studies to better understand and refine diversification techniques to improve synthetic data quality and model outcomes.

My research addresses the existing gap by critically evaluating current methods for data diversification and the metrics used to assess diversity in synthetic datasets. It examines the effectiveness of existing diversification strategies and proposes innovative approaches to advance data generation techniques. Key areas of exploration include the integration of Retrieval-Augmented Generation (RAG) and the investigation of the impact of seed corpus diversity and selection processes on synthetic data generation. Additionally, my research aims to incorporate methodologies and concepts from related fields, such as data distillation and active learning. These aspects are underexplored in the literature and represent promising avenues for enhancing the quality of synthetic data.

To accurately evaluate the effectiveness of diversification methods, it is essential to develop robust assessment techniques for data diversity. These techniques should extend beyond the commonly studied lexical diversity to include the semantic dimension, with particular emphasis on its relevance to the specific task at hand, which could result in varying semantic spaces depending on the context of the task and the data.
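As a point of reference for the lexical baseline mentioned above, the sketch below computes distinct-n, a commonly used lexical diversity measure (the share of n-grams in a collection that are unique). It is not taken from the abstract: the function name `distinct_n`, the whitespace tokenization, and the lowercasing are illustrative assumptions, and the measure says nothing about the semantic dimension the abstract calls for.

```python
from typing import Iterable


def distinct_n(texts: Iterable[str], n: int = 2) -> float:
    """Return the fraction of n-grams across all texts that are unique.

    Assumption: tokens are lowercased, whitespace-separated words.
    Returns 0.0 when the texts contain no n-grams of the requested size.
    """
    total = 0
    unique = set()
    for text in texts:
        tokens = text.lower().split()
        # Slide a window of size n over the token list.
        grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(grams)
        unique.update(grams)
    return len(unique) / total if total else 0.0


samples = ["the cat sat", "the cat ran"]
print(distinct_n(samples, n=2))  # 3 unique bigrams out of 4 -> 0.75
```

A score near 1.0 means little surface repetition across samples. Two paraphrases with different wording would still score as diverse here, which is one reason a purely lexical measure can be insufficient.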

My research focuses on textual datasets and benchmarks that are used to assess a model's language understanding capability. Robustness, adaptability, and generalization are important in building reliable and trustworthy AI models. Increased reliability will ensure consistent performance across diverse tasks, while advancements in self-awareness and self-improvement mechanisms may facilitate greater model autonomy and adaptability.

By addressing the limitations of current synthetic data generation methods and emphasizing data diversity, my research seeks to develop more capable and generalizable AI models. Such improvements are vital for overcoming challenges related to data scarcity and quality, thus advancing toward the realization of Artificial General Intelligence (AGI), where AI systems exhibit the capability to perform a wide array of tasks, continuously learn, and autonomously evolve.

Adrian Chan

amchan@ncsu.edu

Faculty Sponsor: Chau-Wai Wong

Project Title: Handwritten Text Recognition (HTR) for Historical Arabic Documents

Project Abstract

Handwritten Text Recognition (HTR) for Historical Arabic Documents

Handwritten text recognition (HTR) involves taking an image that contains handwritten text and converting it into the corresponding characters on a computer. HTR is a longstanding challenge in computer vision and machine learning due to the significant variability in handwriting styles among individuals. Our project focuses on HTR for Arabic handwriting, which comes with additional challenges compared to HTR for English handwriting. Arabic handwriting is cursive and context-sensitive, and it features connected characters, complex ligatures, and diacritics. Furthermore, the availability of Arabic handwriting datasets is limited compared to English datasets, further increasing the difficulty of training generalizable HTR models. This work proposes a transformer-based neural network to address the unique challenges of transcribing historical Arabic handwriting. We show that image and text preprocessing modifications, synthetic datasets, model pretraining, and overtraining can help adapt state-of-the-art English HTR models to Arabic. We evaluated our proposed model against other Arabic recognition methods using character error rate (CER). Experiments show that our proposed HTR method outperforms all other methods with a CER of 8.6% on the largest publicly available handwritten Arabic text curated by our team.
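For readers unfamiliar with the metric, character error rate is conventionally defined as the character-level edit distance between the reference transcription and the model output, divided by the reference length. The sketch below illustrates that standard definition only; it is not the authors' evaluation code, and the function names are illustrative. It counts Unicode code points, so Arabic diacritics encoded as combining marks count as separate characters unless the text is normalized first.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of character insertions, deletions, and substitutions
    needed to turn ref into hyp (row-by-row dynamic programming)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # delete a reference character
                curr[j - 1] + 1,     # insert a hypothesis character
                prev[j - 1] + cost,  # substitute, or match at no cost
            ))
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate: edit distance divided by reference length."""
    if not ref:
        raise ValueError("reference transcription must be non-empty")
    return levenshtein(ref, hyp) / len(ref)


print(cer("kitten", "sitting"))  # 3 edits over 6 reference characters -> 0.5
print(cer("كتاب", "كتب"))        # 1 missing character over 4 -> 0.25
```

Because the denominator is the reference length, CER can exceed 1.0 when the output contains many spurious characters.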

LiChia Chang

lchang2@ncsu.edu

Faculty Sponsor: Dongkuan (DK) Xu

Project Title: Diagnostic Chatbot for SAP/APM Manufacturing Based on Agentic LLM: A Real-World Industry Application

Project Abstract

Diagnostic Chatbot for SAP/APM Manufacturing Based on Agentic LLM: A Real-World Industry Application

Large Language Models (LLMs) have rapidly gained prominence across various fields, including automated manufacturing domains. In the context of SAP (Systems, Applications, and Products) and Application Performance Management (APM), which are widely used tools that help businesses manage finance, logistics, and supply chain, LLMs offer significant potential. These systems manage terabytes of numeric and textual log data generated by sensors and human operators within manufacturing environments. Traditionally, diagnosing issues from such data has required the expertise of several data scientists and domain specialists with strong background knowledge, who manually analyze each problem. Although some machine-learning techniques have been developed to analyze such data, several limitations persist. For example, the results generated by these techniques often require interpretation by analysts, as deriving meaningful insights from the data can be challenging without deep domain knowledge. This reliance on expert interpretation can hinder the efficiency and accessibility of automated data analysis and troubleshooting in manufacturing environments. Moreover, traditional natural language processing techniques lack a comprehensive solution for handling massive textual data. These techniques are often limited to basic keyword extraction and string matching, which are insufficient for capturing the full complexity of textual messages in industrial contexts.

To harness the capability of LLM-empowered tools, we developed a free-form question-answering chatbot built on an Agentic LLM system that follows the Synergizing Reasoning and Acting (ReAct) framework. This system is integrated with custom-defined tools to address the nature of APM/SAP data and a wide range of inquiries from workers in the manufacturing environment. Concretely, user inquiries can be categorized into four types: questions that do not require any contextual information, questions that necessitate database retrieval, questions that require semantic search, and malicious queries aimed at obtaining protected information through prompt injection attacks. To address these types of inquiries, the Agentic LLM is integrated with specialized tools, each tailored to solve a specific category of question. These tools include an LLM-based SQL translator that operates with the full SAP/APM database schema provided in the system prompt, a SQL engine that executes queries and returns the retrieved results, Retrieval-Augmented Generation (RAG) for semantic search, and an LLM-based final checker to ensure that the final response does not contain unrelated or confidential content. All credit for this work goes to Prometheus Group, Headquarters – Raleigh, NC.
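The four inquiry types and their matching tools can be pictured as a dispatch step. The sketch below is purely illustrative and is not the system described in the abstract: the keyword rules in `classify` stand in for the LLM's routing decision, the fixed SQL string stands in for the LLM-based SQL translator, and the `work_orders` table and all function names are hypothetical. Only the database path is actually executed, using an in-memory SQLite database.

```python
import sqlite3
from enum import Enum


class QueryType(Enum):
    GENERAL = "general"      # needs no contextual information
    DATABASE = "database"    # needs database retrieval
    SEMANTIC = "semantic"    # needs semantic search
    MALICIOUS = "malicious"  # prompt-injection attempt


def classify(query: str) -> QueryType:
    """Hypothetical keyword stand-in for the LLM's routing decision."""
    q = query.lower()
    if "ignore previous instructions" in q or "system prompt" in q:
        return QueryType.MALICIOUS
    if q.startswith("how many") or "count" in q:
        return QueryType.DATABASE
    if "similar" in q:
        return QueryType.SEMANTIC
    return QueryType.GENERAL


def make_demo_db() -> sqlite3.Connection:
    """Build a tiny in-memory table (hypothetical schema) for the demo."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE work_orders (id INTEGER, status TEXT)")
    conn.executemany(
        "INSERT INTO work_orders VALUES (?, ?)",
        [(1, "open"), (2, "closed"), (3, "open")],
    )
    return conn


def answer(query: str, conn: sqlite3.Connection) -> str:
    """Route a query to the handler for its category."""
    kind = classify(query)
    if kind is QueryType.MALICIOUS:
        return "Request refused."
    if kind is QueryType.DATABASE:
        # Fixed query standing in for the LLM-based SQL translator.
        sql = "SELECT COUNT(*) FROM work_orders WHERE status = 'open'"
        (count,) = conn.execute(sql).fetchone()
        return f"{count} open work orders"
    if kind is QueryType.SEMANTIC:
        return "Semantic search is not implemented in this sketch."
    return "General answer (no retrieval needed)."


conn = make_demo_db()
print(answer("How many work orders are open?", conn))  # 2 open work orders
```

In an agentic system the routing and the SQL text would come from the model rather than from fixed rules, and a final check on the response would run before anything is returned to the user.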

Teddy Chen

xchen87@ncsu.edu

Faculty Sponsor: Shiyan Jiang, Dongkuan Xu

Project Title: Unveiling Implicit Bias in LLM-Generated Educational Materials: A Prompt-Based Approach with BiasViz

Project Abstract

Unveiling Implicit Bias in LLM-Generated Educational Materials: A Prompt-Based Approach with BiasViz

Large Language Models (LLMs) are increasingly utilized in educational settings for tasks like personalized learning, content generation, and assessment. However, these models often perpetuate implicit biases rooted in their training data, including gender, geographic, and social biases, which can subtly influence educational outcomes. Traditional bias detection methods focus on explicit biases, often relying on predefined lists of stereotypes. However, implicit biases, those that operate unconsciously, remain a significant challenge and can lead to unequal learning experiences. This project proposes a novel prompt-based approach to uncover and measure implicit bias in LLM-generated educational content. We will design a dataset of stereotype-laden prompts, subtly embedding information through names, contexts, and cultural references, and use it to evaluate widely used LLMs like GPT-4 and LLaMA. By analyzing LLM outputs for bias in scenarios such as writing assistance and grading, we aim to provide a more comprehensive understanding of how these models function and where they may falter.

To support our research, we have designed and developed BiasViz, an innovative interactive tool that allows users to visualize, analyze, and mitigate biases in LLM-generated content. Initially developed as a prototype focused on gender bias, BiasViz can be easily expanded to study broader bias scenarios, including geographic and social biases. The prototype has already been deployed at BiasViz (https://llm-biasviz.teddysc.me/), enabling students and researchers to explore biases through dynamic visualizations, such as next-word probability and biased phrase highlighting, making the often opaque nature of LLMs more transparent. The tool also allows users to create counter-narratives and dynamically links detected biases to their sources within the training data. Additionally, BiasViz provides functionality for bias quantification and mitigation strategies, drawing on frameworks like IBM AI Fairness 360. By incorporating BiasViz into our research, we aim to empower users to actively participate in the process of bias identification and correction, contributing to the development of fairer and more inclusive educational AI systems. This project is guided by Dr. DK Xu from the Department of Computer Science at NC State, Dr. Shiyan Jiang from the College of Education at NC State, and Dr. Duri Long from the School of Communication at Northwestern University, all experts in AI fairness, educational technology, and human-centered computing.

Aldo Dagnino

adagnin@ncsu.edu

Faculty Sponsor: Aldo Dagnino

Project Title: Condition Monitoring of Hydro-power Turbines in IIOT Power Generation Plants

Project Abstract

Condition Monitoring of Hydro-power Turbines in IIOT Power Generation Plants